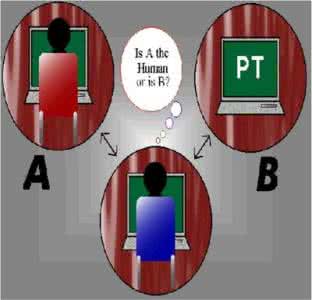

Large language models (LLMs) are increasingly used in the social sciences to simulate human behavior, based on the assumption that they can generate realistic, human-like text. Yet this assumption remains largely untested. Existing validation efforts rely heavily on human-judgment-based evaluations -- testing whether humans can distinguish AI from human output -- despite evidence that such judgments are blunt and unreliable. As a result, the field lacks robust tools for assessing the realism of LLM-generated text or for calibrating models to real-world data. This paper makes two contributions. First, we introduce a computational Turing test: a validation framework that integrates aggregate metrics (BERT-based detectability and semantic similarity) with interpretable linguistic features (stylistic markers and topical patterns) to assess how closely LLMs approximate human language within a given dataset. Second, we systematically compare nine open-weight LLMs across five calibration strategies -- including fine-tuning, stylistic prompting, and context retrieval -- benchmarking their ability to reproduce user interactions on X (formerly Twitter), Bluesky, and Reddit. Our findings challenge core assumptions in the literature. Even after calibration, LLM outputs remain clearly distinguishable from human text, particularly in affective tone and emotional expression. Instruction-tuned models underperform their base counterparts, and scaling up model size does not enhance human-likeness. Crucially, we identify a trade-off: optimizing for human-likeness often comes at the cost of semantic fidelity, and vice versa. These results provide a much-needed scalable framework for validation and calibration in LLM simulations -- and offer a cautionary note about their current limitations in capturing human communication.
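The aggregate metrics and interpretable features named above can be made concrete with a minimal Python sketch. It is not the authors' implementation and rests on several assumptions. Sentence embeddings and human-vs-LLM classifier predictions are taken as already computed; the BERT-based models the paper uses are not reproduced here. Detectability is expressed as classifier accuracy on a balanced test set. The three stylistic features (`mean_words`, `exclamation_rate`, `question_rate`) are hypothetical stand-ins for the paper's stylistic markers, and all function names are illustrative.

```python
import math
from statistics import mean

# Hypothetical sketch: embeddings and classifier predictions are assumed to be
# precomputed elsewhere (e.g. by a sentence encoder and a fine-tuned classifier).


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def mean_semantic_similarity(human_embs: list[list[float]],
                             llm_embs: list[list[float]]) -> float:
    """Average similarity between each human reply and the LLM reply
    generated for the same context (pairs are aligned by index)."""
    return mean(cosine_similarity(h, m) for h, m in zip(human_embs, llm_embs))


def detectability(labels: list[int], predictions: list[int]) -> float:
    """Accuracy of a human-vs-LLM classifier (1 = LLM text, 0 = human text).

    On a balanced test set, 0.5 means the classifier is at chance (outputs
    indistinguishable); values near 1.0 mean LLM outputs are easy to detect.
    """
    correct = sum(1 for y, p in zip(labels, predictions) if y == p)
    return correct / len(labels)


def stylistic_profile(texts: list[str]) -> dict[str, float]:
    """A few interpretable surface features, averaged over a corpus."""
    return {
        "mean_words": mean(len(t.split()) for t in texts),
        "exclamation_rate": mean(t.count("!") for t in texts),
        "question_rate": mean(t.count("?") for t in texts),
    }


if __name__ == "__main__":
    # Toy inputs, purely illustrative.
    human = ["Hi there!", "Why?"]
    llm = ["Hello, I hope you are having a wonderful day.", "That is a great question."]
    print(stylistic_profile(human))
    print(stylistic_profile(llm))
    print(mean_semantic_similarity([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [1.0, 0.0]]))
    print(detectability([1, 0, 1, 0], [1, 0, 0, 0]))
```

In this sketch, a better-calibrated model would push `detectability` toward 0.5 while keeping `mean_semantic_similarity` high; the trade-off reported in the abstract means these two targets can pull in opposite directions, which is why the two scores are computed separately rather than merged into a single number.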
