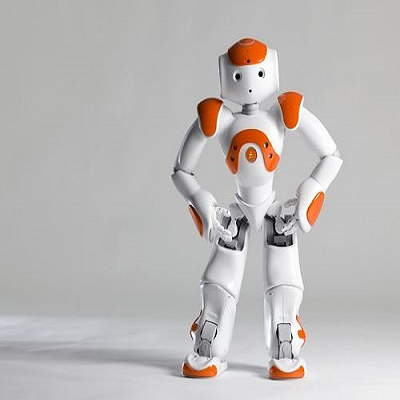

Enabling humanoid robots to follow free-form language commands is critical for seamless human-robot interaction, collaborative task execution, and general-purpose embodied intelligence. While recent advances have improved low-level humanoid locomotion and robot manipulation, language-conditioned whole-body control remains a significant challenge. Existing methods are often limited to simple instructions and sacrifice either motion diversity or physical plausibility. To address this, we introduce Humanoid-LLA, a Large Language Action Model that maps expressive language commands to physically executable whole-body actions for humanoid robots. Our approach integrates three core components: a unified motion vocabulary that aligns human and humanoid motion primitives into a shared discrete space; a vocabulary-directed controller distilled from a privileged policy to ensure physical feasibility; and a physics-informed fine-tuning stage using reinforcement learning with dynamics-aware rewards to enhance robustness and stability. Extensive evaluations in simulation and on a real-world Unitree G1 humanoid show that Humanoid-LLA delivers strong language generalization while maintaining high physical fidelity, outperforming existing language-conditioned controllers in motion naturalness, stability, and execution success rate.
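The abstract does not say how the shared discrete motion vocabulary is built. One common way to construct such a space is vector quantization, as in VQ-VAE-style motion tokenizers: each continuous motion feature is snapped to its nearest entry in a codebook, and that entry's index becomes a discrete token. The minimal sketch below is built on several assumptions. It assumes that human and humanoid motions have already been encoded into feature vectors of the same dimension (those encoders are hypothetical and not shown). It also uses a fixed toy codebook in place of a learned one. The names `quantize`, `dequantize`, and `squared_distance` are illustrative and are not taken from Humanoid-LLA.

```python
from typing import List, Sequence

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature dimensions must match")
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(frames: Sequence[Vector], codebook: Sequence[Vector]) -> List[int]:
    """Map each motion feature frame to the index of its nearest codebook entry.

    Assumption (hypothetical): human and humanoid features were already embedded
    into the same space, so a single codebook acts as the shared vocabulary.
    """
    return [
        min(range(len(codebook)), key=lambda k: squared_distance(f, codebook[k]))
        for f in frames
    ]


def dequantize(tokens: Sequence[int], codebook: Sequence[Vector]) -> List[List[float]]:
    """Look up the codebook vector for each token, e.g. as input to a downstream decoder."""
    return [list(codebook[t]) for t in tokens]


# Toy 3-token vocabulary (a fixed stand-in for a learned codebook).
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
human_frames = [[0.9, 0.1], [0.1, 0.8]]
humanoid_frames = [[1.1, -0.1], [0.0, 0.05]]

print(quantize(human_frames, codebook))     # [1, 2]
print(quantize(humanoid_frames, codebook))  # [1, 0]
print(dequantize([1, 2], codebook))         # [[1.0, 0.0], [0.0, 1.0]]
```

In a design like this, frames from both embodiments index into the same codebook, so a language model only has to predict token sequences. A controller can then consume the looked-up entries without knowing whether a token was learned from human or humanoid data.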
