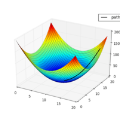

Hyperparameter tuning is an essential step in ensuring that machine learning models converge. We argue that intuition about the optimal choice of hyperparameters for stochastic gradient descent can be obtained by studying a neural network's phase diagram, in which each phase is characterised by distinctive dynamics of the singular values of the weight matrices. Taking inspiration from disordered systems, we start from the observation that the loss landscape of a multilayer neural network trained with a mean squared error loss can be interpreted as a disordered system in feature space: the learnt features are mapped to soft spin degrees of freedom, the initial variance of the weight matrices is interpreted as the strength of the disorder, and the temperature is given by the ratio of the learning rate to the batch size. As the model is trained, three phases can be identified, in which the dynamics of the weight matrices are qualitatively different. Employing a Langevin equation for stochastic gradient descent, previously derived using Dyson Brownian motion, we demonstrate that the three dynamical regimes can be classified effectively, providing practical guidance for the choice of the optimiser's hyperparameters.
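
As a rough illustration of the quantities mentioned above, the following sketch trains a toy linear student (a hypothetical stand-in, not the multilayer network studied in this work) with minibatch SGD on a mean squared error loss and records the singular values of its weight matrix during training. The function names `sgd_temperature` and `train_linear_student`, the noiseless teacher-student data and all default values are assumptions made for illustration. Here `init_std` plays the role of the disorder strength and `learning_rate / batch_size` the role of the temperature; the sketch does not implement the Langevin equation or the phase classification itself.

```python
import numpy as np


def sgd_temperature(learning_rate: float, batch_size: int) -> float:
    """Effective SGD temperature, taken as learning rate / batch size."""
    return learning_rate / batch_size


def train_linear_student(
    n_in: int = 20,
    n_out: int = 10,
    n_samples: int = 512,
    init_std: float = 0.1,
    learning_rate: float = 0.05,
    batch_size: int = 32,
    n_steps: int = 2000,
    record_every: int = 100,
    seed: int = 0,
):
    """Hypothetical toy: minibatch SGD on a linear student y = W x with MSE loss.

    Returns the final weights, the teacher weights, and the singular values
    of W recorded every `record_every` steps (each row in descending order).
    """
    rng = np.random.default_rng(seed)
    # Noiseless teacher-student data: targets are an exact linear map of inputs,
    # so the gradient noise vanishes at the minimum and W can reach the teacher.
    teacher = rng.normal(size=(n_out, n_in)) / np.sqrt(n_in)
    X = rng.normal(size=(n_samples, n_in))
    Y = X @ teacher.T
    # The initial spread of W is the knob mapped to the disorder strength.
    W = rng.normal(scale=init_std, size=(n_out, n_in))
    spectra = []
    for step in range(n_steps):
        if step % record_every == 0:
            spectra.append(np.linalg.svd(W, compute_uv=False))
        idx = rng.choice(n_samples, size=batch_size, replace=False)
        xb, yb = X[idx], Y[idx]
        residual = xb @ W.T - yb  # shape (batch_size, n_out)
        # Gradient of (1 / (2 * batch_size)) * sum of squared residuals w.r.t. W.
        grad = residual.T @ xb / batch_size
        W -= learning_rate * grad
    return W, teacher, np.array(spectra)


W, teacher, spectra = train_linear_student()
print("temperature:", sgd_temperature(0.05, 32))
print("final singular values:", np.round(np.linalg.svd(W, compute_uv=False), 3))
```

Rerunning with different `init_std`, `learning_rate` and `batch_size` values and comparing the recorded spectra is one simple way to see how these hyperparameters shape singular-value dynamics; no claim is made that this toy linear model exhibits the three regimes identified for multilayer networks.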

翻译:超参数调优是保证机器学习模型收敛的关键步骤之一。我们认为,通过研究神经网络的相图可以获得关于随机梯度下降最优超参数选择的直观理解,其中每个相的特征在于权重矩阵奇异值的独特动力学行为。受无序系统启发,我们从以下观察出发:采用均方误差的多层神经网络的损失景观可被解释为特征空间中的无序系统,其中学习到的特征映射为软自旋自由度,权重矩阵的初始方差被解释为无序强度,而温度则由学习率与批大小的比值给出。在模型训练过程中,可识别出三个相,其中权重矩阵的动力学行为存在本质差异。利用先前通过Dyson布朗运动推导的随机梯度下降朗之万方程,我们证明这三种动力学机制可被有效分类,从而为优化器的超参数选择提供实用指导。