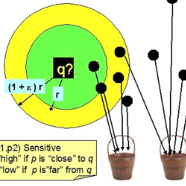

Many popular machine learning techniques in natural language processing and data mining rely heavily on high-quality text sources. However real-world text datasets contain a significant amount of spelling errors and improperly punctuated variants where the performance of these models would quickly deteriorate. Moreover, real-world, web-scale datasets contain hundreds of millions or even billions of lines of text, where the existing text cleaning tools are prohibitively expensive to execute over and may require an overhead to learn the corrections. In this paper, we present FLAN, a scalable randomized algorithm to clean and canonicalize massive text data. Our algorithm relies on the Jaccard similarity between words to suggest correction results. We efficiently handle the pairwise word-to-word comparisons via Locality Sensitive Hashing (LSH). We also propose a novel stabilization process to address the issue of hash collisions between dissimilar words, which is a consequence of the randomized nature of LSH and is exacerbated by the massive scale of real-world datasets. Compared with existing approaches, our method is more efficient, both asymptotically and in empirical evaluations, and does not rely on additional features, such as lexical/phonetic similarity or word embedding features. In addition, FLAN does not require any annotated data or supervised learning. We further theoretically show the robustness of our algorithm with upper bounds on the false positive and false negative rates of corrections. Our experimental results on real-world datasets demonstrate the efficiency and efficacy of FLAN.

翻译:在自然语言处理和数据挖掘中,许多流行的机器学习技术严重依赖高质量的文本源。然而,现实世界文本数据集包含大量拼写错误和不适当标的变体,这些模型的性能会迅速恶化。此外,真实世界、网络规模的数据集包含数亿甚至数十亿行的文本,而现有的文本清理工具执行起来费用极其昂贵,可能需要一个间接费用来学习校正。在本文中,我们提供了FLAN,一种可缩放的随机算法,用于清理大规模文本数据,并能够将大量文本数据化。我们的算法依靠 Jaccar 相似的字典来建议更正结果。我们通过LShing(LSH)有效地处理对对词比较。我们还提议了一个新的稳定进程,以解决不一致的词串联的问题,这是LSH随机性的后果,并且由于真实世界数据集的庞大规模而加剧。与现有的方法相比,我们的方法更有效率,既具有虚拟的,又具有模拟性的,在实验性评估中也具有相似的校正性,我们并不需要额外的数据学习或直观性。