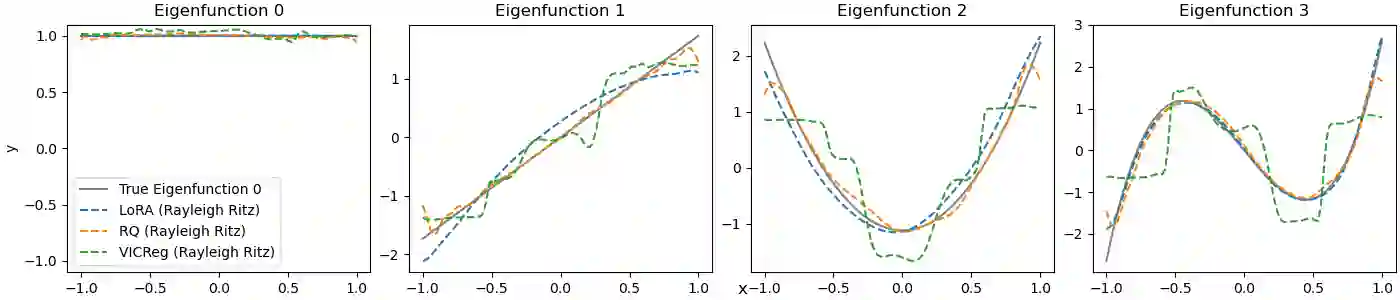

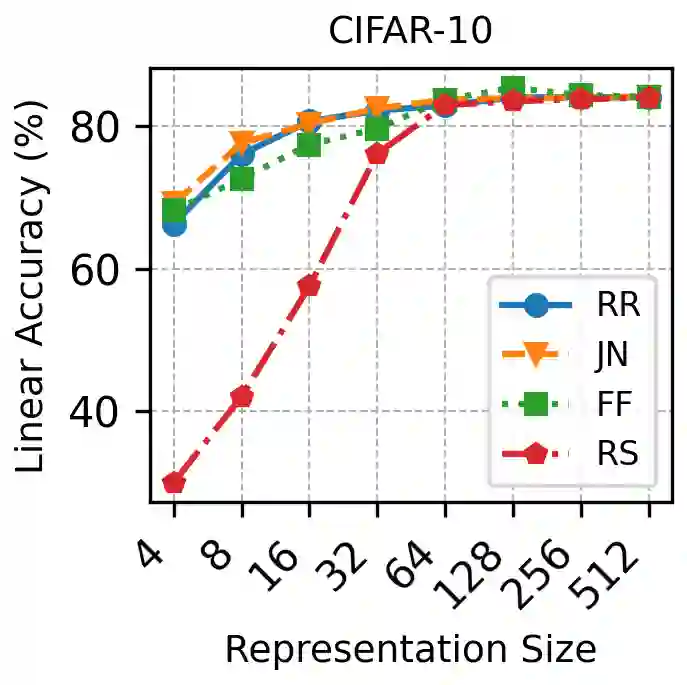

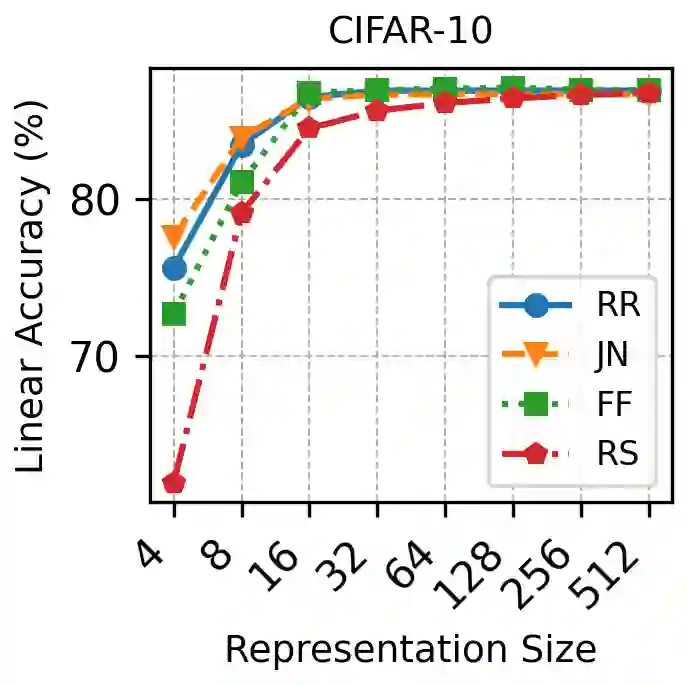

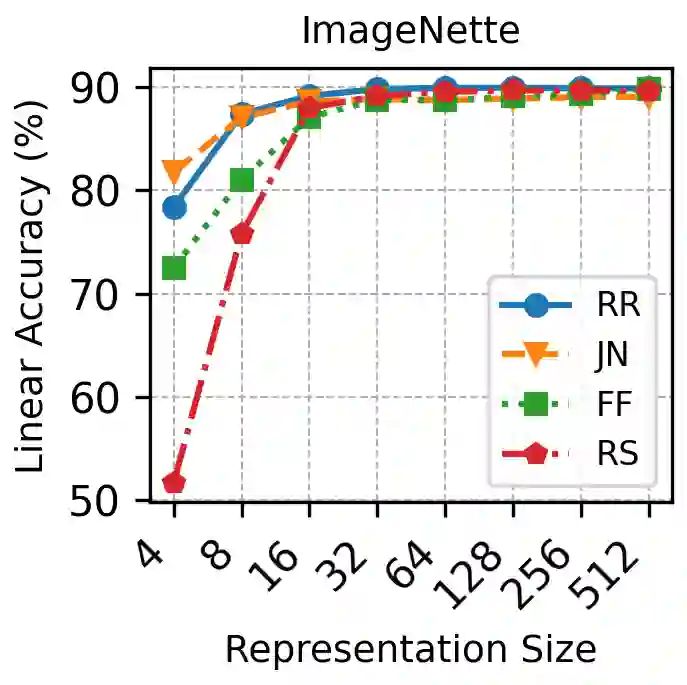

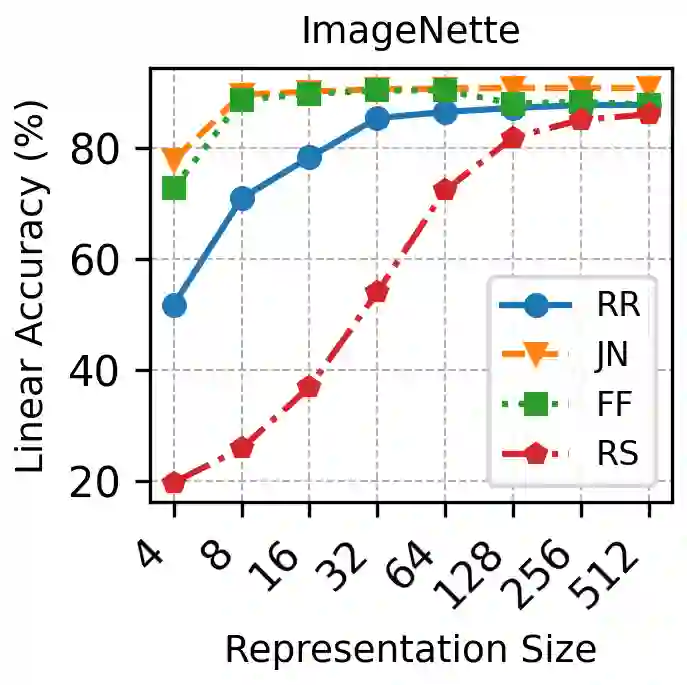

Recent advances in representation learning reveal that widely used objectives, such as contrastive and non-contrastive losses, implicitly perform a spectral decomposition of a contextual kernel induced by the relationship between inputs and their contexts. Yet these methods recover only the linear span of the kernel's top eigenfunctions, whereas an exact spectral decomposition is essential for understanding feature ordering and importance. In this work, we propose a general framework for extracting ordered and identifiable eigenfunctions, built from modular components designed to satisfy key desiderata, including compatibility with the contextual kernel and scalability to modern settings. We then show how two main methodological paradigms, low-rank approximation and Rayleigh quotient optimization, align with this framework for eigenfunction extraction. Finally, we validate our approach on synthetic kernels and demonstrate on real-world image datasets that the recovered eigenvalues act as effective importance scores for feature selection, enabling principled efficiency-accuracy tradeoffs via adaptive-dimensional representations.
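The gap between recovering a span and recovering ordered eigenfunctions can be illustrated on a finite kernel matrix. The following is a minimal NumPy sketch under stated assumptions, not the framework proposed here. The kernel is a known synthetic PSD matrix rather than a contextual kernel estimated from data. The "learned features" are simulated as an exact top-k factor multiplied by a random rotation, mimicking what a low-rank objective can identify. Ordered eigenpairs are then recovered with the classical Rayleigh-Ritz procedure, a standard textbook technique used here only as a stand-in. All function names (`make_kernel`, `span_only_features`, `rayleigh_ritz`, `truncation_errors`) are hypothetical.

```python
import numpy as np


def make_kernel(n=200, eigvals=(5.0, 3.0, 2.0, 1.0, 0.5), seed=0):
    """Synthetic PSD kernel matrix K = U diag(eigvals) U^T with a known, ordered spectrum."""
    rng = np.random.default_rng(seed)
    U, _ = np.linalg.qr(rng.standard_normal((n, len(eigvals))))  # orthonormal columns
    lam = np.asarray(eigvals, dtype=float)
    return (U * lam) @ U.T, U, lam


def span_only_features(U, lam, k, seed=1):
    """Hypothetical stand-in for features from a low-rank objective: F F^T equals the
    best rank-k approximation of K, but F is expressed in an arbitrary rotated basis."""
    R, _ = np.linalg.qr(np.random.default_rng(seed).standard_normal((k, k)))  # random orthogonal k x k
    F = U[:, :k] * np.sqrt(lam[:k])  # exact top-k factor (eigvals are sorted descending)
    return F @ R                     # (F R)(F R)^T == F F^T, so R cannot be identified


def rayleigh_ritz(K, F):
    """Recover ordered eigenpairs of K restricted to span(F) via Rayleigh-Ritz."""
    Q, _ = np.linalg.qr(F)       # orthonormal basis of span(F)
    T = Q.T @ K @ Q              # k x k projected kernel (matrix of Rayleigh quotients)
    T = (T + T.T) / 2            # symmetrize against floating-point round-off
    mu, S = np.linalg.eigh(T)    # eigenvalues in ascending order
    order = np.argsort(mu)[::-1] # reorder so the largest eigenvalue comes first
    return mu[order], Q @ S[:, order]


def truncation_errors(K, mu, V):
    """Frobenius error of K when keeping only the top-d recovered eigenpairs, d = 1..k."""
    return [np.linalg.norm(K - (V[:, :d] * mu[:d]) @ V[:, :d].T) for d in range(1, len(mu) + 1)]


K, U, lam = make_kernel()
F = span_only_features(U, lam, k=4)
mu, V = rayleigh_ritz(K, F)
errs = truncation_errors(K, mu, V)
print(np.round(mu, 6))    # ordered eigenvalues, usable as per-dimension importance scores
print(np.round(errs, 6))  # error decreases as more top-ranked dimensions are kept
```

Rotating `F` leaves `F @ F.T` unchanged, which is why an objective that only matches the kernel's low-rank approximation cannot single out individual eigenfunctions. Solving the small projected eigenproblem removes that ambiguity (up to sign) when the eigenvalues are distinct, and the sorted eigenvalues then give a natural order for truncating the representation to its top d dimensions.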
