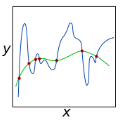

Adding entropic regularization to Optimal Transport (OT) problems has become a standard approach for designing efficient and scalable solvers. However, regularization introduces a bias from the true solution. To mitigate this bias while still benefiting from the acceleration provided by regularization, a natural solver would adaptively decrease the regularization as it approaches the solution. Although some algorithms heuristically implement this idea, their theoretical guarantees and the extent of their acceleration compared to using a fixed regularization remain largely open. In the setting of semi-discrete OT, where the source measure is continuous and the target is discrete, we prove that decreasing the regularization can indeed accelerate convergence. To this end, we introduce DRAG: Decreasing (entropic) Regularization Averaged Gradient, a stochastic gradient descent algorithm where the regularization decreases with the number of optimization steps. We provide a theoretical analysis showing that DRAG benefits from decreasing regularization compared to a fixed scheme, achieving an unbiased $\mathcal{O}(1/t)$ sample and iteration complexity for both the OT cost and the potential estimation, and a $\mathcal{O}(1/\sqrt{t})$ rate for the OT map. Our theoretical findings are supported by numerical experiments that validate the effectiveness of DRAG and highlight its practical advantages.

翻译:在最优传输问题中添加熵正则化已成为设计高效可扩展求解器的标准方法。然而,正则化会引入与真实解之间的偏差。为在保持正则化加速优势的同时减小该偏差,一种自然的求解器应在接近解时自适应地降低正则化强度。尽管已有算法启发式地实现了这一思想,但其理论保证以及与固定正则化方法相比的加速程度仍很大程度上尚未明确。在半离散最优传输(源测度为连续、目标测度为离散)的设定下,我们证明降低正则化确实能够加速收敛。为此,我们提出DRAG算法:递减(熵)正则化平均梯度法,这是一种正则化强度随优化步数递减的随机梯度下降算法。我们通过理论分析表明,与固定正则化方案相比,DRAG受益于递减正则化机制,在OT成本与势函数估计上均实现了无偏的$\mathcal{O}(1/t)$样本与迭代复杂度,并在OT映射估计上达到$\mathcal{O}(1/\sqrt{t})$收敛速率。数值实验验证了DRAG的有效性并凸显其实际优势,为理论发现提供了支持。