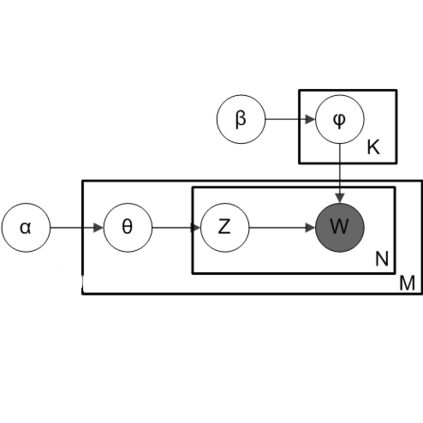

Traditional topic models such as Latent Dirichlet Allocation (LDA) have been widely used to uncover latent structures in text corpora, but they often struggle to integrate auxiliary information such as metadata, user attributes, or document labels. These limitations restrict their expressiveness, personalization, and interpretability. To address this, we propose nnLDA, a neural-augmented probabilistic topic model that dynamically incorporates side information through a neural prior mechanism. nnLDA models each document as a mixture of latent topics, where the prior over topic proportions is generated by a neural network conditioned on auxiliary features. This design allows the model to capture complex nonlinear interactions between side information and topic distributions that static Dirichlet priors cannot represent. We develop a stochastic variational Expectation-Maximization algorithm to jointly optimize the neural and probabilistic components. Across multiple benchmark datasets, nnLDA consistently outperforms LDA and Dirichlet-Multinomial Regression in topic coherence, perplexity, and downstream classification. These results highlight the benefits of combining neural representation learning with probabilistic topic modeling in settings where side information is available.
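The neural prior described above can be illustrated with a minimal sketch of the generative process. The abstract does not specify the network architecture or how its output is mapped to a valid prior, so everything here is an assumption: the one-hidden-layer network, the softplus link that produces Dirichlet concentrations, and the names `neural_prior`, `sample_dirichlet`, and `generate_document` are hypothetical. The variational EM training procedure is not shown.

```python
import math
import random


def softplus(x):
    # Numerically stable log(1 + exp(x)); used to keep concentrations positive.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def neural_prior(side, W1, b1, W2, b2):
    """Map side information to Dirichlet concentrations.

    Assumption: a one-hidden-layer tanh network with a softplus output;
    the paper's actual architecture may differ.
    """
    hidden = [math.tanh(sum(w * s for w, s in zip(row, side)) + b)
              for row, b in zip(W1, b1)]
    return [softplus(sum(w * h for w, h in zip(row, hidden)) + b) + 1e-3
            for row, b in zip(W2, b2)]


def sample_dirichlet(alpha, rng):
    # Standard construction: normalize independent Gamma(alpha_k, 1) draws.
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def generate_document(side, params, beta, n_words, rng):
    """Draw one document: alpha = f(side), theta ~ Dir(alpha), then words."""
    alpha = neural_prior(side, *params)
    theta = sample_dirichlet(alpha, rng)
    words = []
    for _ in range(n_words):
        # Pick a topic from the document's proportions, then a word from that topic.
        z = rng.choices(range(len(theta)), weights=theta)[0]
        w = rng.choices(range(len(beta[z])), weights=beta[z])[0]
        words.append(w)
    return alpha, theta, words


# Toy setup (all sizes are illustrative): 3 topics, 6 word types,
# 2 side features, 4 hidden units.
rng = random.Random(0)
K, V, D, H = 3, 6, 2, 4


def rand_matrix(rows, cols):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


params = (rand_matrix(H, D), [0.0] * H, rand_matrix(K, H), [0.0] * K)
beta = [sample_dirichlet([0.5] * V, rng) for _ in range(K)]  # topic-word distributions
alpha, theta, words = generate_document([1.0, -0.5], params, beta, 20, rng)
```

In this sketch, two documents with different side-information vectors receive different concentration vectors `alpha`, whereas standard LDA would share a single fixed Dirichlet prior across all documents.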
