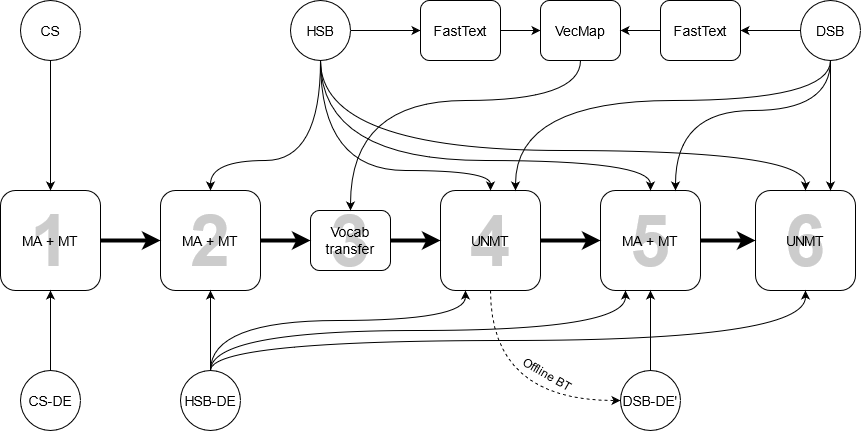

This paper describes the methods behind the systems submitted by the University of Groningen for the WMT 2021 Unsupervised Machine Translation task for German--Lower Sorbian (DE--DSB): a high-resource language to a low-resource one. Our system uses a transformer encoder-decoder architecture in which we make three changes to the standard training procedure. First, our training focuses on two languages at a time, contrasting with a wealth of research on multilingual systems. Second, we introduce a novel method for initializing the vocabulary of an unseen language, achieving improvements of 3.2 BLEU for DE$\rightarrow$DSB and 4.0 BLEU for DSB$\rightarrow$DE. Lastly, we experiment with the order in which offline and online back-translation are used to train an unsupervised system, finding that using online back-translation first works better for DE$\rightarrow$DSB by 2.76 BLEU. Our submissions ranked first (tied with another team) for DSB$\rightarrow$DE and third for DE$\rightarrow$DSB.
