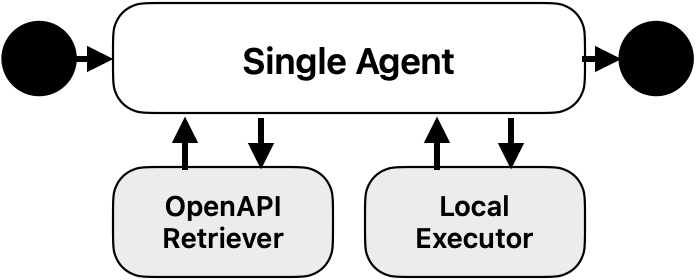

Representational State Transfer (REST) APIs are a cornerstone of modern cloud native systems. Ensuring their reliability demands automated test suites that exercise diverse and boundary level behaviors. Nevertheless, designing such test cases remains a challenging and resource intensive endeavor. This study extends prior work on Large Language Model (LLM) based test amplification by evaluating single agent and multi agent configurations across four additional cloud applications. The amplified test suites maintain semantic validity with minimal human intervention. The results demonstrate that agentic LLM systems can effectively generalize across heterogeneous API architectures, increasing endpoint and parameter coverage while revealing defects. Moreover, a detailed analysis of computational cost, runtime, and energy consumption highlights trade-offs between accuracy, scalability, and efficiency. These findings underscore the potential of LLM driven test amplification to advance the automation and sustainability of REST API testing in complex cloud environments.

翻译:暂无翻译