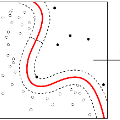

Effective log anomaly detection is critical to sustaining reliability in large-scale IT infrastructures. Transformer-based models require substantial compute and labeled data, which exacerbates the cold-start problem in target domains where logs are scarce. Existing cross-domain methods leverage source-domain logs but generalize poorly because they rely on surface lexical similarity and fail to capture latent semantic equivalence across structurally divergent logs. To address this, we propose LogICL, a framework that distills Large Language Model (LLM) reasoning into a lightweight encoder for cross-domain anomaly detection. During training, LogICL constructs a delta matrix that measures the utility of demonstrations, selected via Maximal Marginal Relevance (MMR), relative to zero-shot inference. The encoder is optimized with a multi-objective loss comprising three terms: an ICL-guided term that aligns representations according to their reasoning-assistance utility, a maximum mean discrepancy (MMD) term for domain alignment, and a supervised contrastive loss. At inference, the optimized encoder retrieves reasoning-aware demonstrations using semantic similarity and delta scores, enabling in-context learning with a frozen LLM and Chain-of-Thought prompting for accurate and interpretable detection. Experiments on few-shot and zero-shot cross-domain benchmarks show that LogICL achieves state-of-the-art performance across heterogeneous systems. Further analysis through visualizations and case studies indicates that LogICL bridges the semantic gap beyond surface lexical similarity, capturing latent semantic equivalence and supporting rapid deployment.
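To make the demonstration-selection step concrete, the following is a minimal sketch of greedy Maximal Marginal Relevance over embedding vectors, together with one possible reading of a delta score. It is illustrative only: the function names (`cosine`, `mmr_select`, `delta_score`), the trade-off weight `lam`, the toy vectors, and the definition of delta as the gain in correct-label probability over zero-shot inference are all assumptions, not details given in the abstract.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def mmr_select(query, candidates, k, lam=0.7):
    """Greedily pick up to k candidate indices by Maximal Marginal Relevance.

    Each step maximizes lam * relevance - (1 - lam) * redundancy, where
    relevance is similarity to the query and redundancy is the highest
    similarity to any already-selected candidate.
    """
    selected = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:

        def score(i):
            relevance = cosine(query, candidates[i])
            redundancy = max(
                (cosine(candidates[i], candidates[j]) for j in selected),
                default=0.0,
            )
            return lam * relevance - (1 - lam) * redundancy

        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


def delta_score(p_correct_with_demo, p_correct_zero_shot):
    """Assumed utility of a demonstration: gain over zero-shot inference."""
    return p_correct_with_demo - p_correct_zero_shot


# Toy embeddings (hypothetical): two near-duplicate logs and one distinct log.
query = [1.0, 0.2]
candidates = [[1.0, 0.0], [1.0, 0.05], [0.5, 1.0]]

print(mmr_select(query, candidates, k=2, lam=0.5))  # balances relevance and diversity
print(mmr_select(query, candidates, k=2, lam=1.0))  # pure relevance ranking
print(delta_score(0.9, 0.6))
```

With a lower `lam`, the second pick favors the distinct candidate over the near-duplicate of the first pick, which is the behavior MMR is meant to provide when assembling a diverse demonstration set.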
