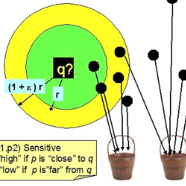

We present a universal framework for similarity-preserving encodings that subsumes all discrete, continuous, algebraic, and learned similarity methods under a single theoretical umbrella. By formulating similarity as functional witness projection over monoids, we prove that \[ O\!\left(\frac{1}{Δ^{2}}\log N\right) \] encoding complexity with ranking preservation holds for arbitrary algebraic structures. This unification reveals that Bloom filters, Locality Sensitive Hashing (LSH), Count-Min sketches, Random Fourier Features, and Transformer attention kernels are instances of the same underlying mechanism. We provide complete proofs with explicit constants under 4-wise independent hashing, handle heavy-tailed witnesses via normalization and clipping, and prove \[ O(\log N) \] complexity for all major similarity methods from 1970-2024. We give explicit constructions for Boolean, Natural, Real, Tropical, and Product monoids, prove tight concentration bounds, and demonstrate compositional properties enabling multi-primitive similarity systems.

翻译:暂无翻译