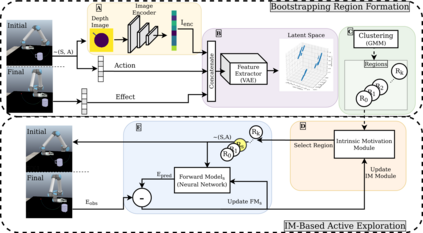

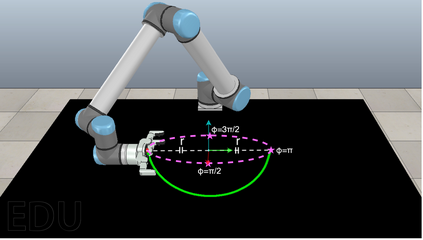

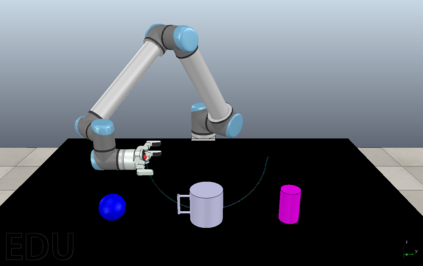

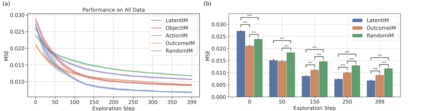

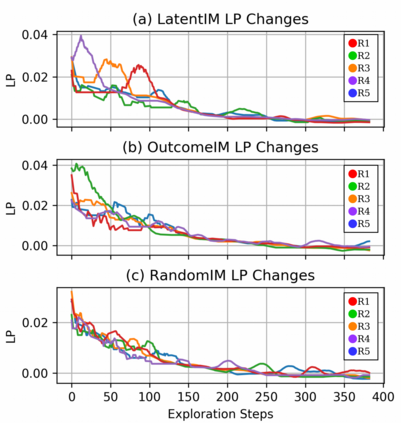

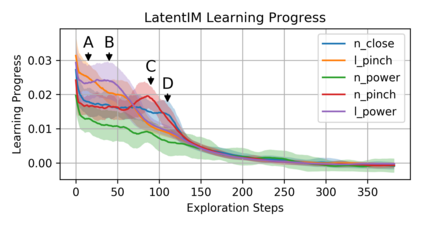

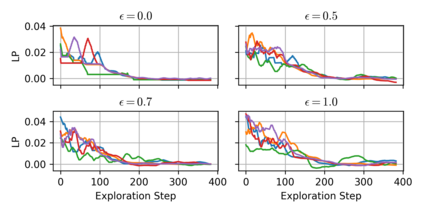

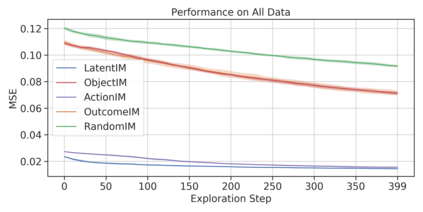

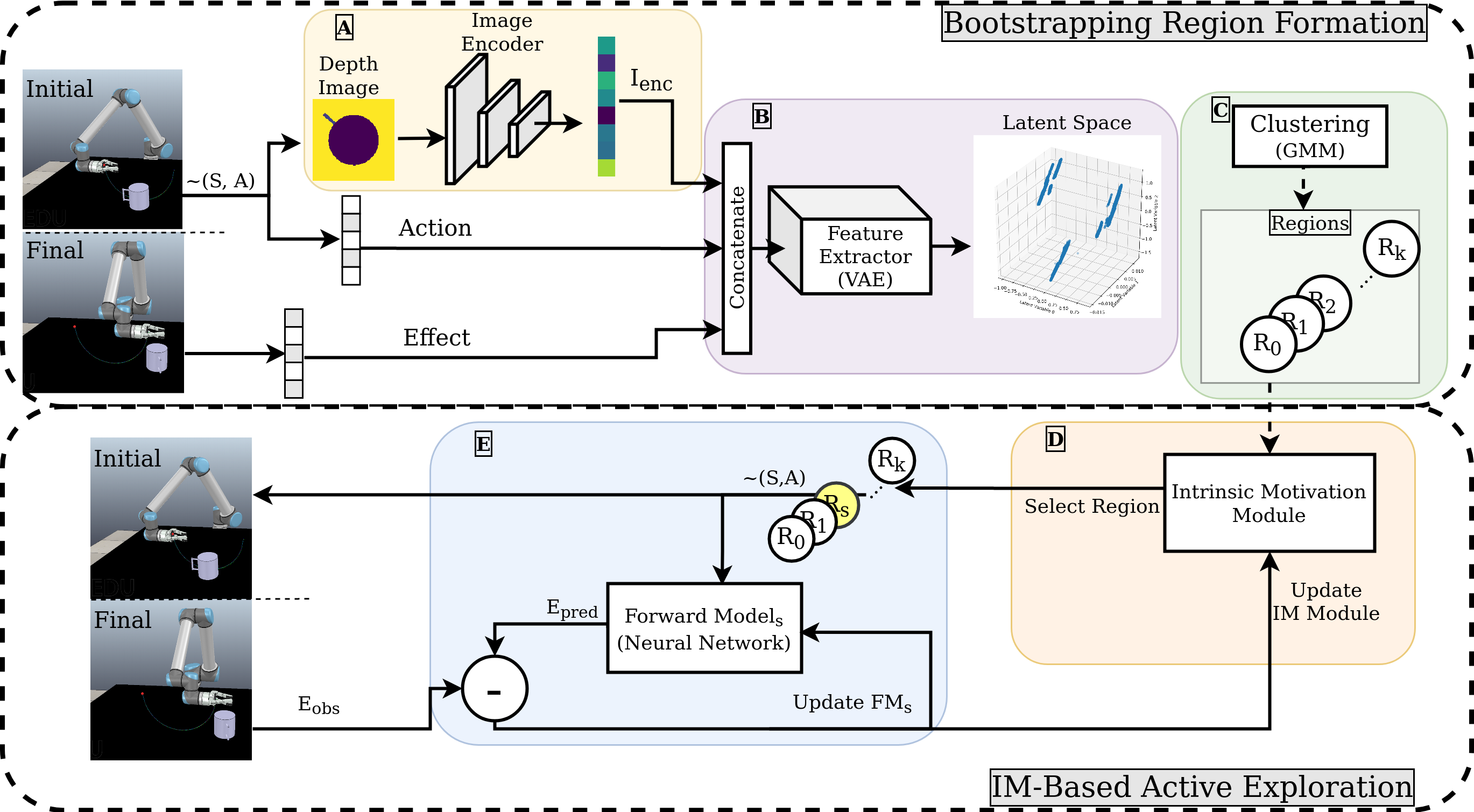

One effective approach for equipping artificial agents with sensorimotor skills is self-exploration. Doing this efficiently is critical, because time and data collection are costly. In this study, we propose an exploration mechanism that blends action, object, and action-outcome representations into a latent space, in which local regions are formed to host forward model learning. At each exploration step, the agent uses intrinsic motivation to select the forward model with the highest learning progress. This parallels how infants learn, since high learning progress indicates that the learning problem in the selected region is neither too easy nor too difficult. The proposed approach is validated with a simulated robot in a table-top environment. The simulation scene comprises a robot and various objects; the robot interacts with one object at a time using a set of parameterized actions and learns the outcomes of these interactions. With the proposed approach, the robot organizes its own learning curriculum, as in existing intrinsic motivation approaches, and outperforms them in learning speed. Moreover, the learning regime exhibits features that partially match infant development; in particular, the proposed system learns to predict the outcomes of different skills in a staged manner.
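The selection rule described above can be illustrated with a minimal sketch. The abstract does not define how learning progress is computed or how regions are formed in the latent space, so everything here is an assumption made for illustration: the class `RegionProgress`, the function `select_region`, the window size, and the choice to measure learning progress as the drop in mean prediction error between an older and a newer window of samples (one common formulation in intrinsic-motivation work, not necessarily the one used in this study).

```python
from collections import deque


class RegionProgress:
    """Tracks recent prediction errors of one region's forward model.

    Hypothetical helper: the windowed error difference below is an
    assumed definition of learning progress, not the paper's formula.
    """

    def __init__(self, window=5):
        self.window = window
        # Keep the last 2 * window errors: an older half and a newer half.
        self.errors = deque(maxlen=2 * window)

    def record(self, error):
        self.errors.append(float(error))

    def learning_progress(self):
        # Report zero progress until both halves of the history are full.
        if len(self.errors) < 2 * self.window:
            return 0.0
        history = list(self.errors)
        older = sum(history[:self.window]) / self.window
        newer = sum(history[self.window:]) / self.window
        # Positive when the prediction error is decreasing.
        return older - newer


def select_region(regions):
    """Return the index of the region with the highest learning progress."""
    return max(range(len(regions)), key=lambda i: regions[i].learning_progress())


# Toy usage: an already-mastered region, an unlearnable one, and one
# whose error is steadily falling.
easy, hard, learnable = RegionProgress(), RegionProgress(), RegionProgress()
for step in range(10):
    easy.record(0.01)                    # error is already low and flat
    hard.record(1.0)                     # error stays high and flat
    learnable.record(1.0 - 0.08 * step)  # error decreases over time

regions = [easy, hard, learnable]
chosen = select_region(regions)
```

In this toy run the flat-error regions both report zero progress, so the region with falling error is chosen. This matches the intuition in the abstract that the preferred region is neither too easy nor too difficult.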
