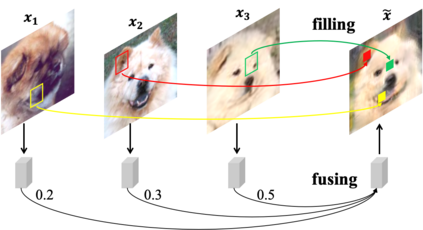

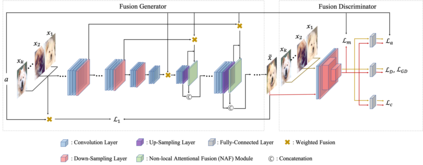

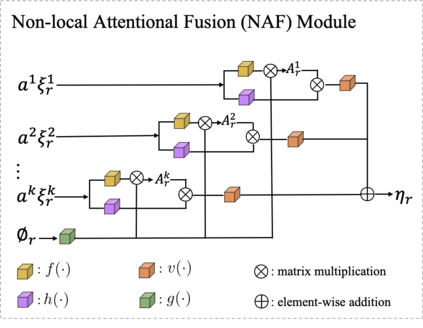

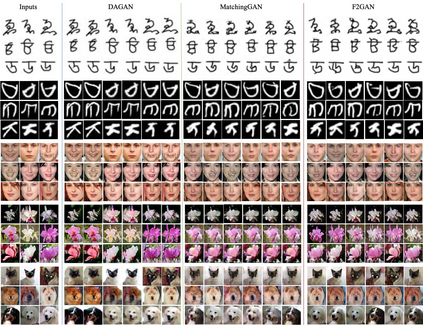

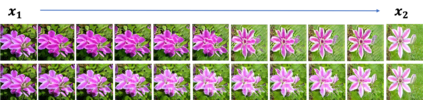

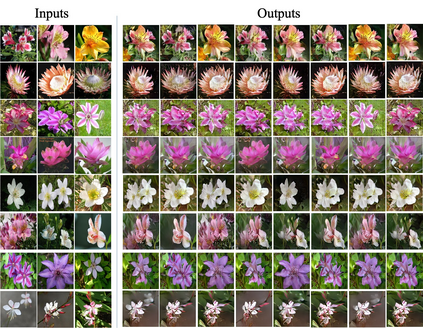

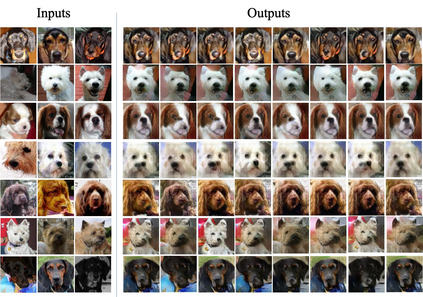

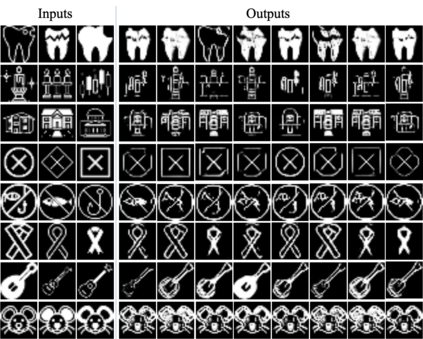

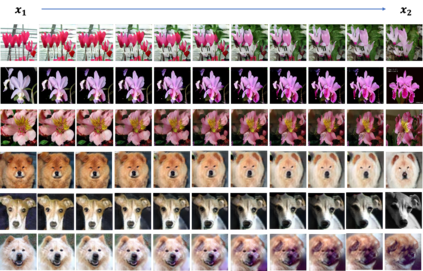

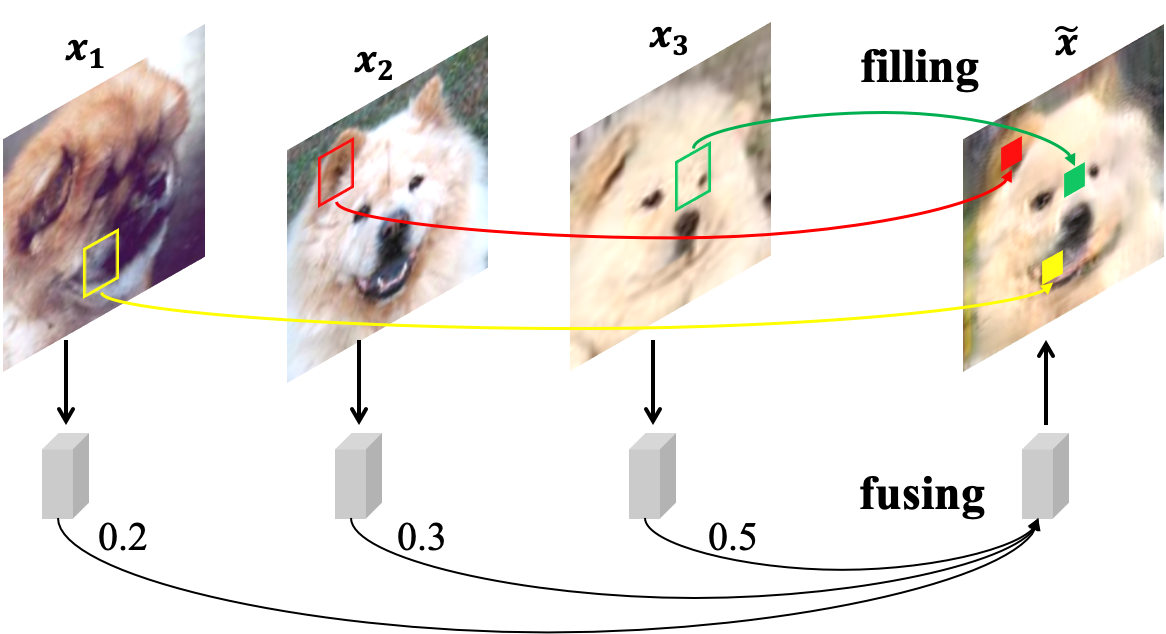

To generate images for a given category, existing deep generative models generally rely on abundant training images. However, extensive data acquisition is expensive, and real-world applications require the ability to learn quickly from limited data. In addition, existing methods are not well suited to fast adaptation to a new category. Few-shot image generation, which aims to generate images for a new category from only a few images, has attracted some research interest. In this paper, we propose a Fusing-and-Filling Generative Adversarial Network (F2GAN) that generates realistic and diverse images for a new category from only a few images. In F2GAN, a fusion generator fuses the high-level features of the conditional images with random interpolation coefficients, and then fills in attended low-level details with a non-local attention module to produce a new image. Moreover, our discriminator ensures the diversity of the generated images through a mode seeking loss and an interpolation regression loss. Extensive experiments on five datasets demonstrate the effectiveness of our proposed method for few-shot image generation.
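
As a rough illustration of the fusion step, the sketch below forms a new high-level feature map as a convex combination of the K conditional images' features. It is a minimal NumPy sketch, not the authors' implementation: the array layout `(K, C, H, W)`, the function names, and the use of a flat Dirichlet distribution to draw the random interpolation coefficients are all assumptions.

```python
import numpy as np


def sample_interpolation_coefficients(k, rng):
    """Draw k non-negative coefficients that sum to 1, one per conditional image.

    Assumption: a flat Dirichlet(1, ..., 1) distribution; F2GAN's exact
    sampling scheme may differ.
    """
    return rng.dirichlet(np.ones(k))


def fuse_high_level_features(features, alphas):
    """Fuse K high-level feature maps with interpolation coefficients.

    features: shape (K, C, H, W), e.g. encoder bottleneck outputs (assumed layout)
    alphas:   shape (K,), coefficients that sum to 1
    returns:  shape (C, H, W), the weighted sum over the K conditional images
    """
    features = np.asarray(features, dtype=float)
    alphas = np.asarray(alphas, dtype=float)
    if features.shape[0] != alphas.shape[0]:
        raise ValueError("need one coefficient per conditional image")
    # Contract the K axis: sum_k alphas[k] * features[k]
    return np.tensordot(alphas, features, axes=1)


# Usage: fuse three random 8-channel 4x4 feature maps
rng = np.random.default_rng(0)
feats = rng.standard_normal((3, 8, 4, 4))
alphas = sample_interpolation_coefficients(3, rng)
fused = fuse_high_level_features(feats, alphas)
```

Because a fresh set of coefficients is drawn for every sample, the same few conditional images can yield many different fused features, which is presumably a large part of where the output variety comes from.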

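The filling step can be pictured as attention in which each position of the fused, decoder-side feature map queries every position of the conditional images' lower-level encoder features and borrows their details. The sketch below assumes identity query/key/value projections and scaled dot-product scores; a real non-local module would use learned projections and would merge the attended features back into the decoder, both of which are omitted here. The function name `attend_low_level_details` is hypothetical.

```python
import numpy as np


def attend_low_level_details(query_feat, cond_feats):
    """Non-local attention from a fused feature map onto K conditional feature maps.

    query_feat: shape (C, H, W), decoder feature derived from the fused features
    cond_feats: shape (K, C, H', W'), encoder features of the K conditional images
    returns:    shape (C, H, W); each query position receives an attention-weighted
                average over all K*H'*W' positions of cond_feats
    Assumption: identity query/key/value projections (no learned weights).
    """
    query_feat = np.asarray(query_feat, dtype=float)
    cond_feats = np.asarray(cond_feats, dtype=float)
    c, h, w = query_feat.shape
    q = query_feat.reshape(c, h * w).T                      # (H*W, C)
    kv = cond_feats.transpose(1, 0, 2, 3).reshape(c, -1).T  # (K*H'*W', C)
    scores = q @ kv.T / np.sqrt(c)                          # (H*W, K*H'*W')
    scores -= scores.max(axis=1, keepdims=True)             # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)           # softmax over positions
    attended = weights @ kv                                 # (H*W, C)
    return attended.T.reshape(c, h, w)


# Usage: two conditional feature maps, one fused query map
rng = np.random.default_rng(1)
query = rng.standard_normal((4, 3, 3))
cond = rng.standard_normal((2, 4, 3, 3))
filled = attend_low_level_details(query, cond)
```

Since every output vector is a softmax-weighted average of conditional feature vectors, the filled details always come from the conditional images themselves rather than being synthesized from scratch.
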
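
The two diversity-oriented losses can be sketched as follows. The mode seeking term follows the ratio form popularized by MSGAN (image distance divided by latent-code distance, inverted so that minimizing it rewards diversity), with the interpolation coefficients assumed to play the role of the latent code. The interpolation regression term is assumed to be a squared error between coefficients predicted from the generated image (for instance by an extra discriminator head, hypothetical here) and the coefficients actually used. Both forms are assumptions rather than the paper's exact definitions.

```python
import numpy as np


def mode_seeking_loss(img_a, img_b, alpha_a, alpha_b, eps=1e-5):
    """MSGAN-style mode seeking loss for two images generated from the same
    conditional images with different interpolation coefficients.

    Assumption: the interpolation coefficients act as the latent code.
    Lower values mean the images differ more relative to their coefficients.
    """
    img_dist = np.mean(np.abs(np.asarray(img_a, float) - np.asarray(img_b, float)))
    code_dist = np.mean(np.abs(np.asarray(alpha_a, float) - np.asarray(alpha_b, float)))
    ratio = img_dist / code_dist  # requires alpha_a != alpha_b
    return 1.0 / (ratio + eps)


def interpolation_regression_loss(predicted_alphas, true_alphas):
    """Mean squared error between predicted and true interpolation coefficients.

    Assumption: predicted_alphas come from a hypothetical discriminator head
    that looks at the generated image.
    """
    diff = np.asarray(predicted_alphas, float) - np.asarray(true_alphas, float)
    return float(np.mean(diff ** 2))


# Usage: two coefficient vectors and two stand-in "generated images"
a1, a2 = np.array([0.75, 0.25]), np.array([0.25, 0.75])
img1 = np.zeros((3, 8, 8))
img2 = np.ones((3, 8, 8))
loss_ms = mode_seeking_loss(img1, img2, a1, a2)
loss_reg = interpolation_regression_loss([0.5, 0.5], [1.0, 0.0])
```

In MSGAN the ratio itself is maximized by the generator; taking its reciprocal, as above, turns it into a quantity to minimize alongside the usual adversarial loss.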