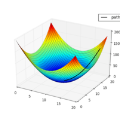

We lay the theoretical foundation for automating optimizer design in gradient-based learning. Following the greedy principle, we formulate optimizer design as maximizing the instantaneous decrease in loss. By treating an optimizer as a function that maps loss-gradient signals to parameter motions, we reduce the problem to a family of convex optimization problems over the space of optimizers. Solving these problems under various constraints recovers a wide range of popular optimizers as closed-form solutions. It also yields the optimal hyperparameters of these optimizers for the problem at hand. This gives a systematic way to design optimizers and tune their hyperparameters from gradient statistics collected during training. Furthermore, this optimization of optimization can be performed dynamically during training.
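The abstract does not state which constraints are used, so the following is only a minimal sketch under an assumed simplest case. The greedy objective is taken to be the first-order loss change $g^\top u$ for an update $u$, and the only constraint is a norm bound $\|u\| \le \eta$. This is a convex problem (linear objective, convex feasible set), and its closed-form solutions are the classical steepest-descent directions. The ℓ2 ball gives normalized gradient descent, the ℓ∞ ball gives sign descent, and an ellipsoid $u^\top M u \le \eta^2$ gives a preconditioned step $-\eta M^{-1}g / \sqrt{g^\top M^{-1} g}$. The function name `greedy_step` and the three norm choices are hypothetical illustrations, not the paper's formulation.

```python
import numpy as np


def greedy_step(g, eta, norm="l2", M=None):
    """Hypothetical illustration: return the update u minimizing the
    first-order loss change g @ u subject to ||u|| <= eta.

    norm="l2"   -> normalized gradient descent, u = -eta * g / ||g||_2
    norm="linf" -> sign descent,                u = -eta * sign(g)
    norm="M"    -> preconditioned step under the norm sqrt(u @ M @ u),
                   with M symmetric positive definite
    """
    g = np.asarray(g, dtype=float)
    if norm == "l2":
        return -eta * g / np.linalg.norm(g)
    if norm == "linf":
        return -eta * np.sign(g)
    if norm == "M":
        # Solve M d = g rather than forming M^{-1} explicitly.
        d = np.linalg.solve(M, g)
        # Scale so that u @ M @ u == eta**2 (the constraint is active).
        return -eta * d / np.sqrt(g @ d)
    raise ValueError(f"unknown norm: {norm}")


g = np.array([3.0, -4.0])
for name in ("l2", "linf"):
    u = greedy_step(g, eta=0.1, norm=name)
    # Predicted instantaneous decrease in loss, to first order.
    print(name, u, "predicted decrease:", -(g @ u))
```

Each step attains a predicted decrease of $\eta$ times the dual norm of $g$: $\eta\|g\|_2 = 0.5$ for ℓ2 and $\eta\|g\|_1 = 0.7$ for ℓ∞ in the example above. The choice of constraint alone therefore selects which familiar optimizer comes out as the closed-form answer.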
