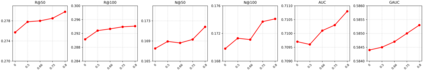

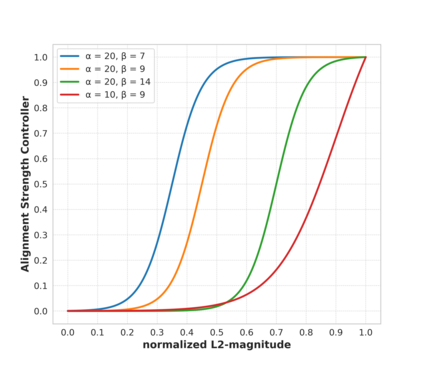

Industrial recommender systems rely on unique Item Identifiers (ItemIDs). However, this approach struggles with scalability and generalization in large, dynamic datasets with sparse long-tail data. Content-based Semantic IDs (SIDs) address this by sharing knowledge through content quantization, but because they ignore dynamic behavioral properties, purely content-based SIDs have limited expressive power. Existing methods attempt to incorporate behavioral information but overlook a critical distinction: unlike relatively uniform content features, user-item interactions are highly skewed and diverse, creating a vast gap in information quality and quantity between popular and long-tail items. This oversight leads to two critical limitations: (1) Noise Corruption: indiscriminate behavior-content alignment allows collaborative noise from long-tail items to corrupt their content representations, causing the loss of critical multimodal information. (2) Signal Obscurity: weighting all SIDs equally fails to reflect the varying importance of different behavioral signals, making it difficult for downstream tasks to distinguish important SIDs from uninformative ones. To tackle these issues, we propose MMQ-v2, a mixture-of-quantization framework that adaptively Aligns, Denoises, and Amplifies multimodal information from the content and behavior modalities for semantic ID learning. We refer to the semantic IDs generated by this framework as ADA-SID. The framework introduces two innovations: an adaptive behavior-content alignment that is aware of information richness and shields representations from noise, and a dynamic behavioral router that amplifies critical signals by applying different weights to SIDs. Extensive experiments on public and large-scale industrial datasets demonstrate ADA-SID's significant superiority in both generative and discriminative recommendation tasks.
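
To make the idea of content quantization concrete, the following is a minimal sketch of how a content embedding might be turned into a short tuple of discrete codes. It assumes residual quantization with fixed, hand-written codebooks, which is one common way to build semantic IDs; the abstract does not specify the quantizer, and the function name `residual_quantize`, the toy codebooks, and the item embeddings are all hypothetical. It does not implement the adaptive alignment or the behavioral router described above.

```python
import numpy as np


def residual_quantize(embedding, codebooks):
    """Return one code index per level by quantizing the running residual.

    Illustrative assumption: plain residual quantization with Euclidean
    nearest-codeword lookup, not the method proposed in the paper.
    """
    residual = np.asarray(embedding, dtype=float)
    codes = []
    for codebook in codebooks:
        # Pick the codeword closest to what is still unexplained.
        distances = np.linalg.norm(codebook - residual, axis=1)
        index = int(np.argmin(distances))
        codes.append(index)
        # Subtract the chosen codeword; the next level refines the remainder.
        residual = residual - codebook[index]
    return tuple(codes)


# Hypothetical toy codebooks: a coarse level and a fine level, 2-D embeddings.
codebooks = [
    np.array([[0.0, 0.0], [10.0, 10.0]]),
    np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]),
]

# Hypothetical content embeddings for three items.
popular_item = [10.9, 10.1]
long_tail_item = [10.1, 11.2]
unrelated_item = [0.2, 0.1]

sid_popular = residual_quantize(popular_item, codebooks)
sid_long_tail = residual_quantize(long_tail_item, codebooks)
sid_unrelated = residual_quantize(unrelated_item, codebooks)

print(sid_popular, sid_long_tail, sid_unrelated)
```

In this toy setup the popular and long-tail items share their first code and differ only in the second, which is the sense in which items with similar content can share knowledge through a common ID prefix, even when one of them has few interactions.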
