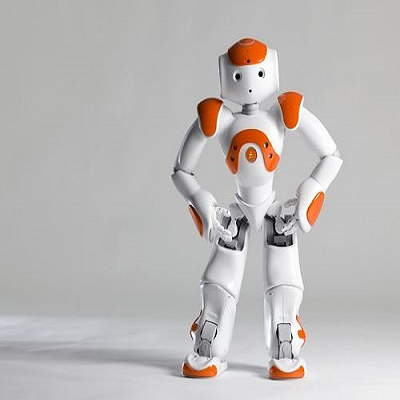

Omnia presents a synthetic-data-driven pipeline to accelerate the training, validation, and deployment readiness of militarized humanoids. The approach converts first-person spatial observations, captured through point-of-view recordings, smart glasses, augmented reality headsets, and spatial browsing workflows, into scalable, mission-specific synthetic datasets for humanoid autonomy. By generating large volumes of high-fidelity simulated scenarios and pairing them with automated labeling and model training, the pipeline enables rapid iteration on perception, navigation, and decision-making capabilities while reducing the cost, risk, and time demands of extensive field trials. The resulting datasets can be quickly tuned for new operational environments and threat conditions, supporting both baseline humanoid performance and advanced subsystems such as multimodal sensing, counter-detection survivability, and reconnaissance behaviors relevant to chemical, biological, radiological, nuclear, and explosive (CBRNE) threats. By exposing humanoid systems to broad scenario diversity early in development, this work aims to shorten development cycles and improve robustness in complex, contested settings.