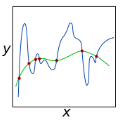

Offline reinforcement learning often relies on behavior regularization, which constrains policies to remain close to the dataset distribution. However, such approaches do not distinguish between high-value and low-value actions in their regularization components. We introduce Guided Flow Policy (GFP), which couples a multi-step flow-matching policy with a distilled one-step actor. The actor guides the flow policy through weighted behavior cloning, so that the flow policy focuses on cloning high-value actions from the dataset rather than indiscriminately imitating all state-action pairs. In turn, the flow policy constrains the actor to remain aligned with the dataset's best transitions while maximizing the critic. This mutual guidance enables GFP to achieve state-of-the-art performance across 144 state-based and pixel-based tasks from the OGBench, Minari, and D4RL benchmarks, with substantial gains on suboptimal datasets and challenging tasks. Webpage: https://simple-robotics.github.io/publications/guided-flow-policy/
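To make the idea of weighted behavior cloning on a flow-matching policy more concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not GFP's actual objective: the abstract does not say how the weights are computed, so the softmax over critic values (`softmax_weights`), the linear noise-to-action interpolation path, and all function names here are hypothetical stand-ins.

```python
import math
import random


def softmax_weights(q_values, temperature=1.0):
    """Map critic values to normalized weights.

    Assumption: a softmax weighting is used purely for illustration;
    the abstract does not specify GFP's weighting rule.
    """
    m = max(q_values)  # subtract the max for numerical stability
    exps = [math.exp((q - m) / temperature) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]


def flow_matching_target(action, noise, t):
    """Point on a linear path from noise (t=0) to action (t=1), plus its velocity.

    Assumption: a straight-line interpolation path, a common choice in
    flow matching, not confirmed by the abstract.
    """
    x_t = [(1 - t) * n + t * a for n, a in zip(noise, action)]
    velocity = [a - n for n, a in zip(noise, action)]
    return x_t, velocity


def weighted_flow_bc_loss(velocity_fn, states, actions, q_values, rng,
                          temperature=1.0):
    """Weighted flow-matching regression loss over a batch.

    Samples with higher critic value contribute more, so the flow policy
    is pushed toward cloning high-value dataset actions.
    """
    weights = softmax_weights(q_values, temperature)
    loss = 0.0
    for state, action, w in zip(states, actions, weights):
        noise = [rng.gauss(0.0, 1.0) for _ in action]
        t = rng.random()
        x_t, target = flow_matching_target(action, noise, t)
        pred = velocity_fn(state, x_t, t)
        loss += w * sum((p - v) ** 2 for p, v in zip(pred, target))
    return loss


# Toy usage with a placeholder velocity model that always predicts zero.
rng = random.Random(0)
states = [[0.0], [1.0], [2.0]]
actions = [[0.1, 0.2], [0.3, -0.4], [1.0, 0.5]]
q_values = [0.0, 1.0, 2.0]
loss = weighted_flow_bc_loss(lambda s, x, t: [0.0] * len(x),
                             states, actions, q_values, rng)
print(loss)
```

A lower `temperature` concentrates the weight on the highest-value samples, while a very large one approaches uniform weighting, which corresponds to ordinary, unweighted behavior cloning.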
