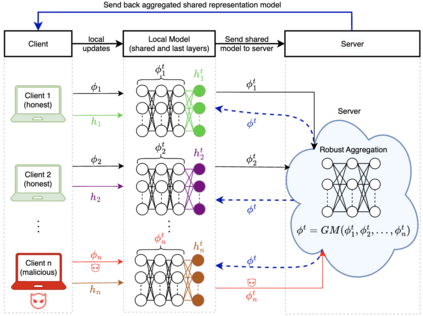

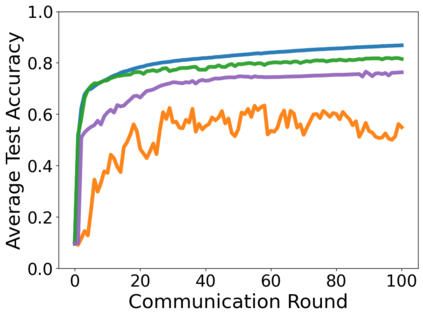

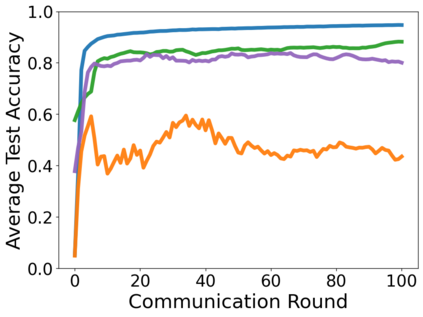

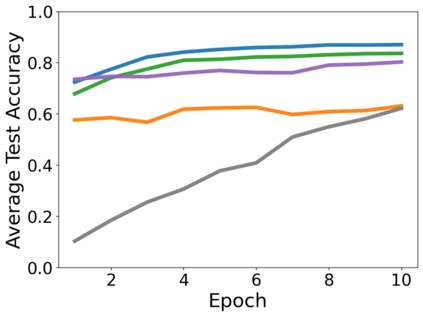

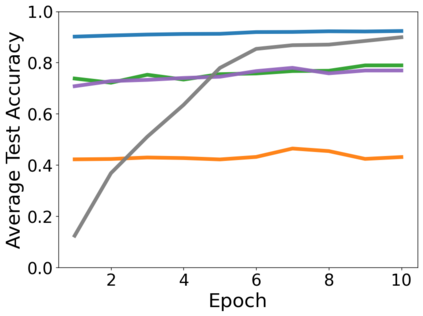

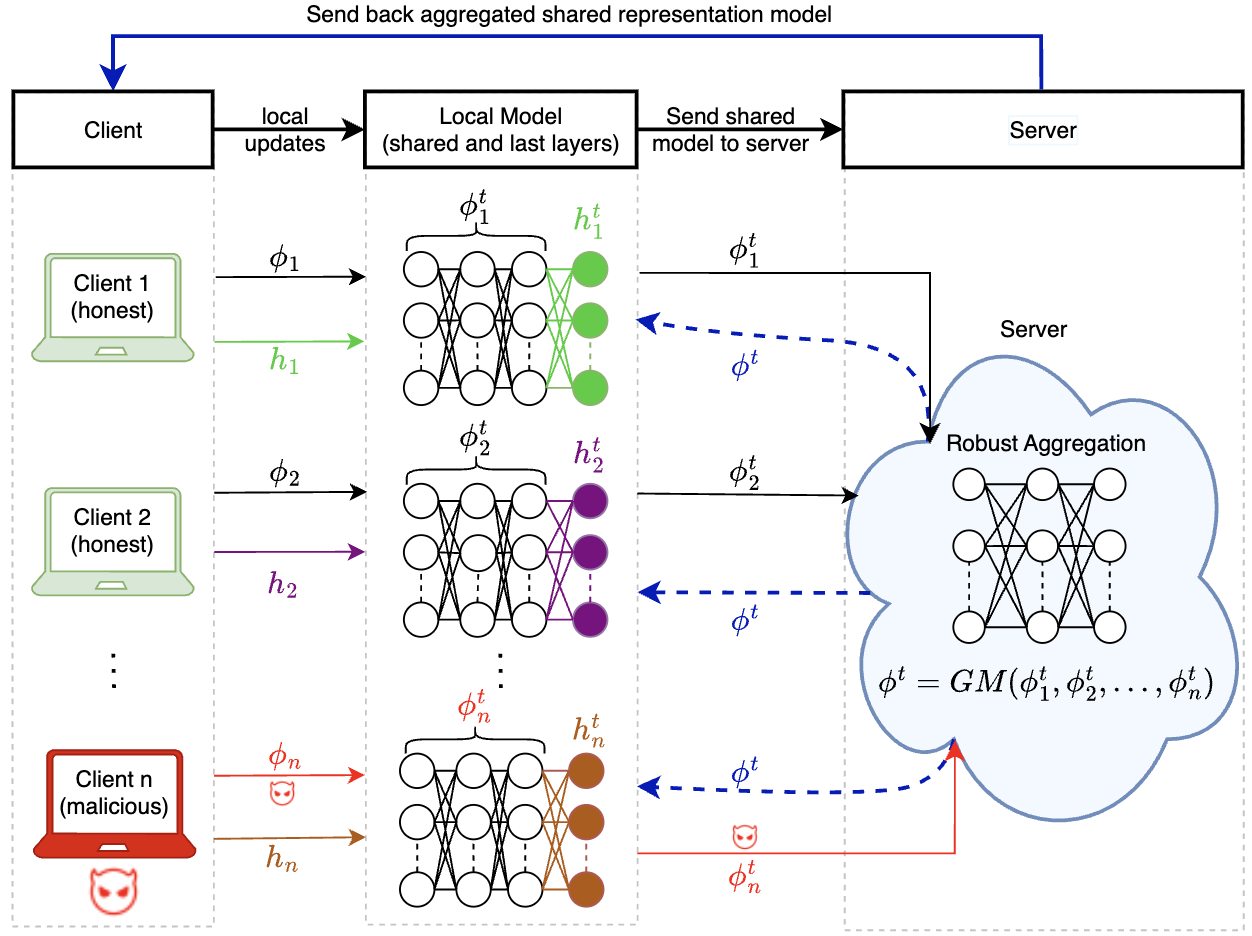

In this paper, we propose BR-MTRL, a Byzantine-resilient multi-task representation learning framework that handles faulty or malicious agents. Our approach performs representation learning with a shared neural network model in which all clients share a common set of layers and differ only in a client-specific final layer. This structure captures features common to all clients while allowing individual adaptation, which makes it well suited to learning personalized models from client data and computational power in heterogeneous federated settings. To learn the model, we employ an alternating gradient descent strategy: each client optimizes its local model, updates its final layer, and sends its estimate of the shared representation to a central server for aggregation. To defend against Byzantine agents, we employ two robust aggregation methods at the server, Geometric Median and Krum. Our method enables personalized learning while maintaining resilience in distributed settings. We implemented the proposed algorithm on a federated testbed built on the Amazon Web Services (AWS) platform and compared its performance with several benchmark algorithms and their variants. Through experiments on real-world datasets, including CIFAR-10 and FEMNIST, we demonstrate the effectiveness and robustness of our approach and its transferability to new, unseen clients with limited data, even in the presence of Byzantine adversaries.
