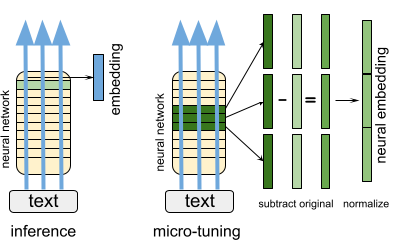

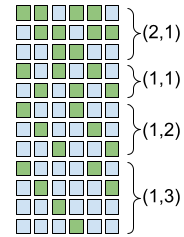

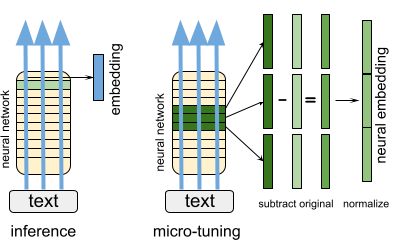

We propose a new kind of embedding for natural language text that deeply represents semantic meaning. Standard text embeddings use the vector output of a pretrained language model. In our method, we let a language model learn from the text and then literally pick its brain, taking the actual weights of the model's neurons to generate a vector. We call this representation of the text a neural embedding. The technique may generalize beyond text and language models, but we first explore its properties for natural language processing. We compare neural embeddings with GPT sentence (SGPT) embeddings on several datasets. We observe that neural embeddings achieve comparable performance with a far smaller model, and that the two methods make different errors.
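The idea of reading an embedding off the model's weights can be illustrated with a minimal sketch. It is a toy under stated assumptions, not the method evaluated here: a character-level bigram softmax model stands in for the language model, and the vocabulary, the zero initialization shared by all texts, the step count, the learning rate, and the names `neural_embedding` and `cosine` are all hypothetical choices made for illustration.

```python
import numpy as np

# Hypothetical toy vocabulary: lowercase letters plus space.
VOCAB = "abcdefghijklmnopqrstuvwxyz "
IDX = {ch: i for i, ch in enumerate(VOCAB)}


def encode(text):
    """Map text to an array of character ids, dropping unknown characters."""
    return np.array([IDX[ch] for ch in text.lower() if ch in IDX])


def neural_embedding(text, steps=50, lr=0.5):
    """Train a tiny bigram softmax model on `text` and return its weights.

    Assumption: every text starts from the same initialization (zeros),
    so the learned weights are comparable across texts.
    """
    ids = encode(text)
    x, y = ids[:-1], ids[1:]          # context character -> next character
    v = len(VOCAB)
    w = np.zeros((v, v))              # row = context, column = next-char logit
    for _ in range(steps):
        logits = w[x]                 # fancy indexing returns a copy
        logits -= logits.max(axis=1, keepdims=True)
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        probs[np.arange(len(y)), y] -= 1.0   # d(cross-entropy)/d(logits)
        grad = np.zeros_like(w)
        np.add.at(grad, x, probs)     # accumulate gradients per context row
        w -= lr * grad / len(y)
    return w.ravel()                  # the weights themselves are the embedding


def cosine(a, b):
    """Cosine similarity between two embedding vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


if __name__ == "__main__":
    e1 = neural_embedding("the cat sat on the mat")
    e2 = neural_embedding("the cat sat on the hat")
    e3 = neural_embedding("zzz qqq xxx jjj")
    print("similar texts:   ", cosine(e1, e2))
    print("dissimilar texts:", cosine(e1, e3))
```

In this sketch the embedding dimension equals the number of trained weights, so in a real language model one would presumably restrict the vector to a chosen subset of weights; which subset to use is left open here.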
