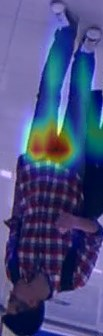

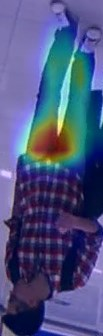

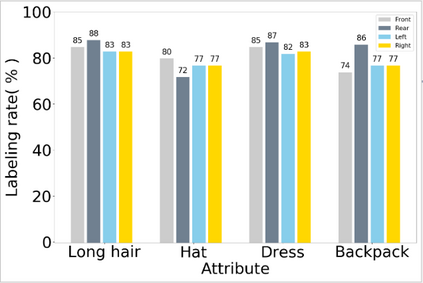

Pedestrian attribute recognition in surveillance scenarios remains challenging because specific attributes are often localized inaccurately. In this paper, we propose a novel view-attribute localization method based on attention (VALA), which exploits the strong relevance between attributes and views to capture specific view-attributes and localizes attribute-corresponding areas with an attention mechanism. A specific view-attribute is composed of the extracted attribute feature and four view scores, which the view predictor outputs as confidences for the attribute from different views. The view-attribute is then delivered back to the shallow network layers to supervise deep feature extraction. To explore the location of a view-attribute, regional attention is introduced to aggregate spatial information of the input attribute feature along the height and width directions, constraining the image to a narrow range. Moreover, the inter-channel dependency of the view-feature is embedded in these two spatial directions. An attribute-specific attention region is obtained by refining the narrow range, balancing the ratio of channel dependencies between the height and width branches. The final view-attribute recognition outcome is obtained by combining the output of regional attention with the view scores from the view predictor. Experiments on widely used datasets (RAP, RAPv2, PETA, and PA-100K) demonstrate the effectiveness of our approach compared with state-of-the-art methods.
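The two mechanisms named in the abstract, aggregation along the height and width directions and fusion with the four view scores, can be illustrated with a minimal sketch. This is not the VALA implementation: the function names `directional_attention` and `fuse_views`, the use of average pooling, the sigmoid gates, the softmax over view logits, and the weighted-sum fusion are all assumptions made for illustration. The sketch works on a single channel, so the inter-channel dependency and the height/width balancing ratio are left out.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def softmax(logits):
    # Subtract the maximum for numerical stability.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def directional_attention(feature_map):
    """Hypothetical helper: build an H x W attention map for one channel.

    feature_map is a list of H rows, each a list of W floats.
    """
    height = len(feature_map)
    width = len(feature_map[0])
    # Pool over the width axis: one descriptor per row (height direction).
    row_desc = [sum(row) / width for row in feature_map]
    # Pool over the height axis: one descriptor per column (width direction).
    col_desc = [
        sum(feature_map[i][j] for i in range(height)) / height
        for j in range(width)
    ]
    # Assumption: a plain sigmoid gate stands in for the learned transforms.
    row_gate = [sigmoid(v) for v in row_desc]
    col_gate = [sigmoid(v) for v in col_desc]
    # The product of the two gates narrows attention to a row/column crossing.
    return [
        [row_gate[i] * col_gate[j] for j in range(width)]
        for i in range(height)
    ]


def fuse_views(view_logits, per_view_attr_logits):
    """Hypothetical helper: weight per-view attribute predictions by view scores."""
    # Assumption: the view scores are a softmax over the view logits.
    scores = softmax(view_logits)
    return sum(s * sigmoid(a) for s, a in zip(scores, per_view_attr_logits))


if __name__ == "__main__":
    feature = [
        [0.0, 0.0, 0.0],
        [0.0, 4.0, 0.0],
        [0.0, 0.0, 0.0],
    ]
    attention = directional_attention(feature)
    for row in attention:
        print([round(v, 3) for v in row])
    # Four views, with the first view dominating the prediction.
    print(round(fuse_views([2.0, 0.0, 0.0, 0.0], [3.0, -1.0, -1.0, -1.0]), 3))
```

In this toy input the strongest response sits at the centre cell, so the product of the row and column gates peaks there. A learned model would replace the plain sigmoid gates with trained layers.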
