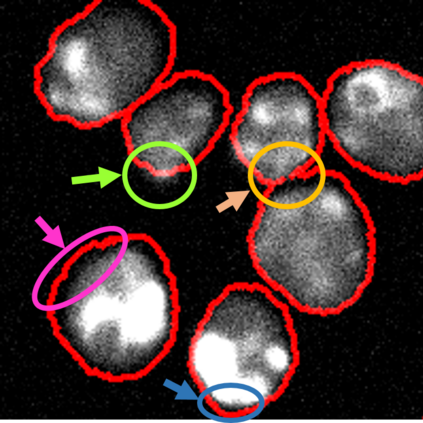

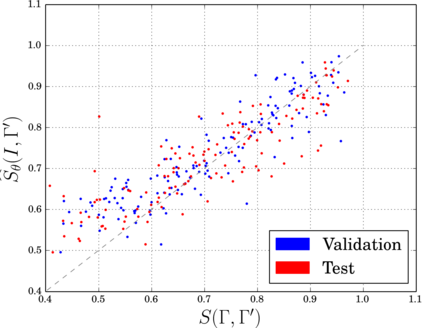

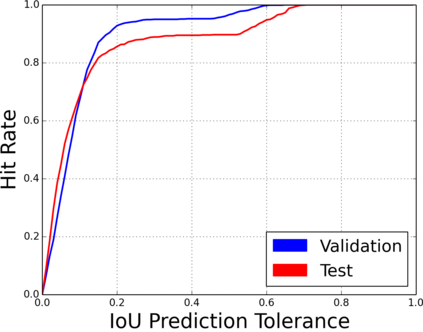

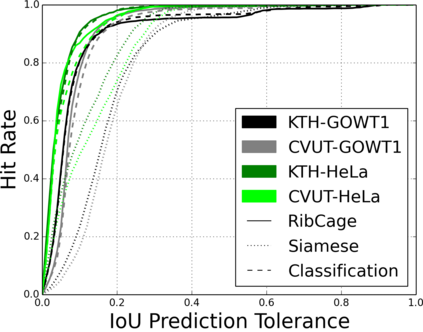

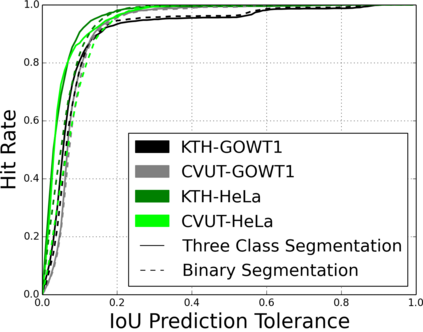

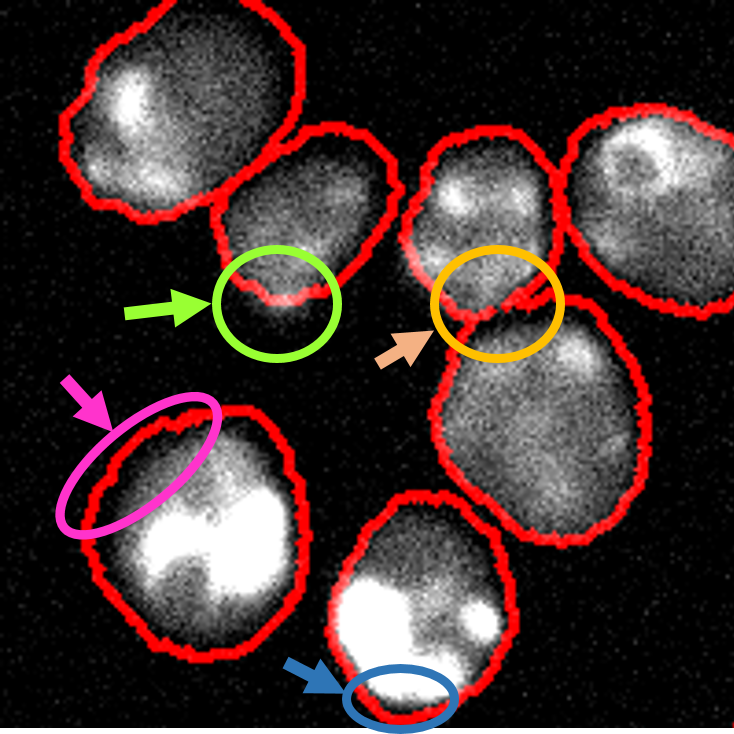

Tools and methods for automatic image segmentation are developing rapidly, each with its own strengths and weaknesses. While these methods are designed to be as general as possible, there are no guarantees for their performance on new data. The choice between methods is usually based on benchmark performance, even though the benchmark data can differ significantly from the user's data. We introduce a novel Deep Learning method which, given an image and a corresponding segmentation obtained by any method, including manual annotation, estimates the Intersection over Union (IoU) measure with respect to the unknown ground truth. We refer to this method as a Quality Assurance Network (QANet). The QANet is designed to give the user an estimate of the segmentation quality on the user's own private data without the need for human inspection or labeling. It is based on the RibCage Network architecture, originally proposed as a discriminator in an adversarial network framework. The QANet was trained on simulated data with synthesized segmentations and was tested on real cell images and segmentations obtained by three different automatic methods, as submitted to the Cell Segmentation Benchmark. We show that the QANet's predictions accurately estimate the IoU scores evaluated by the benchmark organizers based on the ground truth segmentation.
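For readers unfamiliar with the target quantity, the following is a minimal sketch of how the IoU between a segmentation and a known ground truth could be computed for binary masks. It is not the QANet itself, which estimates this value without access to the ground truth, and it is not necessarily the exact per-object evaluation used by the benchmark organizers. The function name `iou` and the convention that two empty masks score 1.0 are assumptions made for illustration.

```python
import numpy as np


def iou(pred: np.ndarray, truth: np.ndarray) -> float:
    """Intersection over Union of two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    if pred.shape != truth.shape:
        raise ValueError("masks must have the same shape")
    union = np.logical_or(pred, truth).sum()
    if union == 0:
        # Assumption: two empty masks are treated as a perfect match.
        return 1.0
    intersection = np.logical_and(pred, truth).sum()
    return float(intersection / union)


# Toy example: two 2x2 squares on a 4x4 grid, offset by one column.
truth = np.zeros((4, 4), dtype=bool)
truth[1:3, 1:3] = True
pred = np.zeros((4, 4), dtype=bool)
pred[1:3, 2:4] = True
score = iou(pred, truth)  # 2 shared pixels out of 6 in the union
```

In this toy case the masks share two pixels and jointly cover six, so the score is one third. A quality estimator in the spirit of the abstract would aim to predict such a value from the image and `pred` alone.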
